Three reasons why pacemakers are vulnerable to hacking

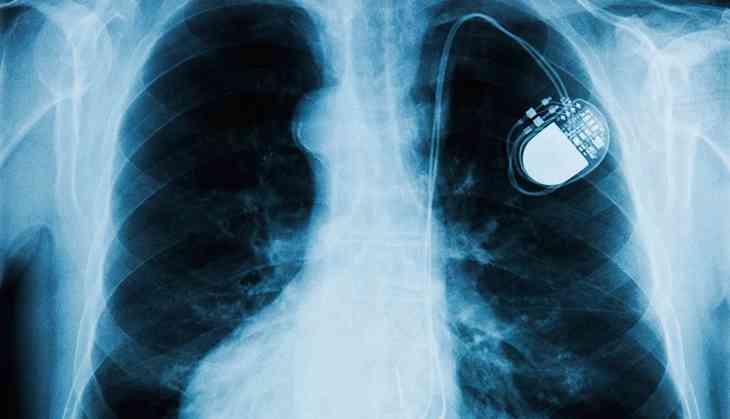

The US Food and Drug Administration (FDA) recently recalled approximately 465,000 pacemakers made by Abbott (formerly St. Jude Medical) because they were vulnerable to hacking, and the situation points to a broader, ongoing security problem.

The reason for the recall? The devices could be remotely "hacked" to increase their pacing activity or drain their batteries, potentially endangering patients. According to reports, a significant portion of the pacemakers are likely to be installed in Australian patients.


Yet the qualities that make remotely accessible human implants desirable (low cost, low-maintenance batteries, small size and remote access) also make securing such devices a serious challenge.

Three key issues hold back cyber-safety:

- Most embedded devices don’t have the memory or power to support proper cryptographic security, encryption or access control.

- Doctors and patients prefer convenience and ease of access over security controls.

- Remote monitoring, an invaluable feature of embedded devices, also makes them vulnerable.

The Abbott situation

According to the FDA, the recall of Abbott's pacemakers does not involve surgery. Instead, each device's firmware can be updated during a visit to a doctor.

The vulnerability appears to be that someone with "commercially-available equipment" could send commands to the pacemaker, changing its settings and software. The "patched" firmware prevents this: it only accepts commands from authorised hardware and software tools.
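The article does not describe how Abbott's patch decides which tools are "authorised", so the following is only a generic illustration of one common approach: message authentication with a shared secret key. In this minimal sketch, assuming a hypothetical key (`DEVICE_KEY`) provisioned into both the implant and approved programmers, every command carries an HMAC-SHA256 tag and an increasing counter. The device rejects commands with a wrong tag (forged by someone without the key) or a stale counter (a recorded command being replayed). The packet layout, key and command strings are all invented for illustration.

```python
import hashlib
import hmac

# Hypothetical secret shared only by the implant and authorised programmers.
DEVICE_KEY = b"example-key-provisioned-at-manufacture"
TAG_LEN = 32      # bytes in an HMAC-SHA256 tag
COUNTER_LEN = 8   # bytes used to encode the anti-replay counter


def sign_command(key: bytes, counter: int, command: bytes) -> bytes:
    """Programmer side: prefix a counter and append an HMAC-SHA256 tag."""
    message = counter.to_bytes(COUNTER_LEN, "big") + command
    tag = hmac.new(key, message, hashlib.sha256).digest()
    return message + tag


class Implant:
    """Device side: accept only commands with a valid tag and a fresh counter."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        self.last_counter = -1

    def accept(self, packet: bytes) -> bool:
        if len(packet) < COUNTER_LEN + TAG_LEN:
            return False  # too short to contain a counter and a tag
        message, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
        expected = hmac.new(self.key, message, hashlib.sha256).digest()
        # Constant-time comparison avoids leaking how many tag bytes matched.
        if not hmac.compare_digest(tag, expected):
            return False  # sender does not hold the key
        counter = int.from_bytes(message[:COUNTER_LEN], "big")
        if counter <= self.last_counter:
            return False  # replay of an earlier, legitimately signed command
        self.last_counter = counter
        return True


if __name__ == "__main__":
    implant = Implant(DEVICE_KEY)
    packet = sign_command(DEVICE_KEY, 1, b"SET_RATE 60")
    print(implant.accept(packet))  # authorised command is accepted
    print(implant.accept(packet))  # the same packet replayed is rejected
    print(implant.accept(sign_command(b"attacker-key", 2, b"SET_RATE 180")))
```

Symmetric primitives such as HMAC are generally much cheaper to compute than public-key operations, which matters on the constrained hardware discussed below. The hard part in practice is not the arithmetic but getting the key into every authorised tool, and keeping it out of everyone else's hands.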

Abbott has downplayed the risks, insisting that none of the 465,000 devices have been reported as compromised.

But fears about cybersecurity attacks on individual medical devices are nothing new.

Medical devices are now part of the “internet of things” (IoT), where small battery-powered sensors combined with embedded and customised computers and radio communications (technologies such as Wi-Fi, Bluetooth, NFC) are finding uses in areas where cybersecurity has not previously been considered.

This clash of worlds brings particular challenges.

1. Power versus security

Most embedded medical devices don’t currently have the memory, processing power or battery life to support proper cryptographic security, encryption or access control.

For example, according to Carnegie Mellon researchers, using HTTPS (a way of encrypting web traffic to prevent eavesdropping) rather than HTTP can increase the energy consumption of some mobile phones by up to 30%, because encrypted traffic can no longer benefit from network proxies such as caching servers.

Conventional cryptographic suites (the algorithms and keys used to prove identity and keep transmissions secret) are designed for computers, and involve complex mathematical operations beyond the power of small, cheap IoT devices.

An emerging solution is to move the cryptography into dedicated hardware chips, but this raises the cost.

The US National Institute of Standards and Technology (NIST) is also developing "lightweight" cryptographic suites designed for low-powered IoT devices.

2. Convenience versus security

Doctors and patients don't expect to have to log into these medical devices every time they use them. The prospect of having to keep usernames, passwords and encryption keys handy and safe runs counter to how they expect to use them.

No one expects to have to log into their toaster or fridge, either. Fortunately, the pervasiveness of smartphones, and their use as interfaces to "smart" IoT devices, is changing users' behaviour on this front.

When your pacemaker fails and the ambulance arrives, however, will you really have the time (or ability) to find the device serial number and authentication details to give to the paramedics?

3. Remote monitoring versus security

Surgical implants present clear medical risks when they need to be removed or replaced. For this reason, remote monitoring is undoubtedly a life-saving technology for patients with these devices.

Patients are no longer reliant on the low-battery "buzz" warning, and if the device malfunctions, doctors can update its software remotely.

Unfortunately, this remote control feature creates a whole new type of vulnerability. If your doctor can remotely update your software, so can others.

Securing devices in the future

The security of connected, embedded medical devices is a “wicked” problem, but solutions are on the horizon.

In the future, we can expect low-cost cryptographic hardware chips and standardised cryptographic suites designed for low-power, low-memory and low-capability devices.


Perhaps we can also expect a generation that is used to logging into everything it touches, with ways of authenticating to devices easily and securely, but we're not there yet.


In the interim, we can only assess the risks and make measured decisions about how to protect ourselves.

James H. Hamlyn-Harris, Senior Lecturer, Computer Science and Software Engineering, Swinburne University of Technology

This article was originally published on The Conversation.

First published: 4 September 2017, 14:51 IST